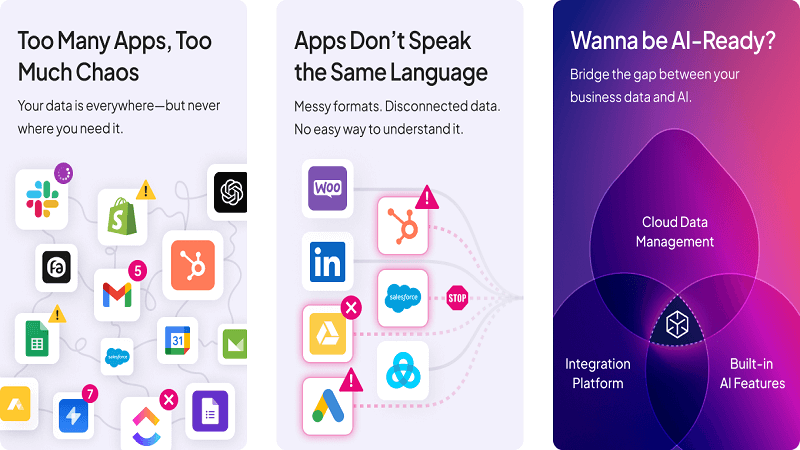

Every enterprise generates massive volumes of information daily—customer interactions, financial transactions, operational metrics, product inventories. Yet when organizations attempt to deploy AI systems that could transform this data into competitive advantage, they hit an invisible wall. The culprit? Data that wasn’t designed for intelligence.

The hidden cost of unprepared infrastructure

Research consistently shows 80-90% of AI initiatives fail before reaching production. The reason isn’t inadequate algorithms or insufficient computing power. Organizations fail because their data foundation cannot support what AI demands: clean, structured, real-time information accessible across every system.

When AI consumes fragmented data from disconnected platforms—each with different formats, inconsistent field names, and conflicting records—the results range from misleading insights to outright dangerous decisions. Companies report losing $12.9 million annually on average from poor data quality alone, before even attempting AI implementation.

Three characteristics that separate ready from unprepared

AI-ready data exhibits specific attributes that traditional business intelligence never required:

Unified structure means information follows consistent schemas regardless of source system. When your CRM, ERP, and marketing platforms define “customer” differently, AI cannot build coherent intelligence. True readiness requires standardization at the storage layer, not just during analysis.
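As a rough illustration of standardizing at the storage layer, the sketch below maps records from two source systems onto one canonical customer schema. The field names, the `FIELD_MAPS` table, and the `Customer` class are hypothetical and chosen only for this example; real CRM and ERP exports will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Customer:
    """Canonical customer record shared by every downstream consumer."""
    customer_id: str
    email: str
    full_name: str


# Hypothetical mappings: source field name -> canonical field name.
FIELD_MAPS = {
    "crm": {"ContactId": "customer_id", "EmailAddress": "email", "Name": "full_name"},
    "erp": {"cust_no": "customer_id", "email": "email", "cust_name": "full_name"},
}


def to_canonical(source: str, record: dict) -> Customer:
    """Rename source-specific fields and normalize values into the shared schema."""
    mapping = FIELD_MAPS[source]
    fields = {canonical: str(record[src]).strip() for src, canonical in mapping.items()}
    fields["email"] = fields["email"].lower()  # emails compare case-insensitively here
    return Customer(**fields)


crm_row = {"ContactId": "C-1001", "EmailAddress": " Ada@Example.com ", "Name": "Ada Lovelace"}
erp_row = {"cust_no": "C-1001", "email": "ada@example.com", "cust_name": "Ada Lovelace"}

crm_customer = to_canonical("crm", crm_row)
erp_customer = to_canonical("erp", erp_row)
```

Once both rows pass through the same mapping step, they compare equal, which is what lets a model treat them as one customer rather than two.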

Real-time synchronization ensures AI works with current reality rather than yesterday’s snapshot. Models trained on stale data make outdated recommendations. Batch processing that updates nightly cannot support conversational AI interfaces or autonomous decision systems that users now expect.

Comprehensive enrichment fills gaps AI would otherwise interpret incorrectly. Missing values, incomplete records, and unvalidated fields cause models to hallucinate or generate biased outputs. Preparation includes automated validation, deduplication, and augmentation before AI ever sees the data.
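A minimal sketch of the validation and deduplication steps might look like the following. The two rules shown (a required `customer_id` and a loosely checked `email`) and the record layout are assumptions for illustration; augmentation is left out, and production rule sets are usually far larger.

```python
import re

# Deliberately loose pattern: something@something.something, no whitespace.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record: dict) -> list[str]:
    """Return the problems found in one record (an empty list means valid)."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    email = record.get("email") or ""
    if not EMAIL_RE.match(email):
        problems.append("invalid email")
    return problems


def prepare(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into clean, deduplicated rows and rejected rows with reasons."""
    clean, rejected, seen = [], [], set()
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
            continue
        key = (record["customer_id"], record["email"].lower())
        if key in seen:
            continue  # duplicate of a record already kept
        seen.add(key)
        clean.append(record)
    return clean, rejected


rows = [
    {"customer_id": "C-1", "email": "ada@example.com"},
    {"customer_id": "C-1", "email": "ADA@example.com"},  # duplicate, different case
    {"customer_id": "", "email": "not-an-email"},        # fails both checks
]
clean, rejected = prepare(rows)
```

Rejected rows keep their reasons attached, so they can be routed to review instead of silently disappearing.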

Why traditional approaches create bottlenecks

Most organizations attempt readiness through point solutions—a data warehouse here, an ETL tool there, manual cleaning processes everywhere. This fragmented approach generates new problems faster than it solves existing ones.

Data scientists report spending 60-80% of project time on preparation rather than model development. Teams build custom pipelines for each AI use case, creating technical debt that makes scaling impossible. When business units deploy their own solutions independently, the resulting data chaos undermines any attempt at enterprise-wide AI strategy.

Security and governance suffer particularly. When preparation happens ad-hoc across multiple tools and teams, tracking data lineage becomes nearly impossible. Compliance officers cannot verify what information AI models accessed or how transformations affected sensitive fields.

The platform approach changes everything

Organizations achieving AI success at scale share a common characteristic: they treat data readiness as infrastructure, not a series of projects. Rather than preparing data separately for each AI initiative, they establish continuous preparation as a core capability.

This platform thinking centralizes previously scattered activities. Automated pipelines ingest from all source systems, apply standardization rules consistently, resolve conflicts through intelligent reconciliation, and maintain a constantly updated repository that any AI application can access.
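The reconciliation step can take many forms. One simple rule, shown here purely as an assumption and not as the approach any particular platform uses, is "newest non-empty value wins" per field. The `updated_at` field and the sample records are hypothetical.

```python
from datetime import datetime


def reconcile(versions: list[dict]) -> dict:
    """Merge versions of one entity, keeping the newest non-empty value per field."""
    merged = {}
    # Process oldest first so that later versions overwrite earlier ones.
    for version in sorted(versions, key=lambda v: v["updated_at"]):
        for field, value in version.items():
            if field == "updated_at":
                continue
            if value not in (None, ""):
                merged[field] = value
    return merged


versions = [
    {"updated_at": datetime(2024, 1, 5), "email": "old@example.com", "phone": "555-0100"},
    {"updated_at": datetime(2024, 3, 2), "email": "new@example.com", "phone": ""},
]
golden = reconcile(versions)
```

Here the newer email replaces the older one, while the phone number survives because the newer record left it blank. Other policies, such as trusting one system of record per field, are equally plausible.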

Built-in enrichment tools leverage AI itself to improve data quality—using machine learning to detect anomalies, predict missing values, and flag inconsistencies automatically. What previously required manual review now happens continuously in the background, maintaining quality without human bottlenecks.
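To make the anomaly-detection idea concrete, here is a small sketch that uses a robust statistical check (the modified z-score based on the median absolute deviation) as a stand-in for a trained model. The threshold of 3.5 is a common convention, and the `daily_orders` series is invented for the example.

```python
from statistics import median


def flag_anomalies(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return the indexes whose modified z-score exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


daily_orders = [102, 98, 105, 99, 101, 970, 100]
suspicious = flag_anomalies(daily_orders)
```

Because the median and MAD are barely affected by the outlier itself, the value 970 stands out clearly while normal day-to-day variation is left alone.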

From reactive cleanup to proactive preparation

The shift from project-based to platform-based readiness fundamentally changes organizational capabilities. Instead of cleaning data after discovering AI failures, systems validate and enrich information at ingestion. Quality issues get caught and corrected before they propagate through downstream applications.

This proactive model enables experimentation that was previously impossible. Data science teams can prototype new AI applications in days rather than months because the foundation already exists. Business units can deploy conversational interfaces or predictive analytics without rebuilding data infrastructure each time.

More importantly, AI systems become trustworthy. When models consume consistently prepared, validated, enriched data, their outputs become reliable enough to inform critical decisions. Executives gain confidence deploying AI beyond experimental use cases into operations that directly impact revenue and customer experience.

Where preparation meets automation

The most advanced implementations connect data readiness directly to workflow automation. AI doesn’t just analyze prepared data—it triggers actions based on insights, creating closed-loop systems that operate with minimal human intervention.

When customer behavior patterns shift, AI-ready infrastructure enables models to detect changes, update predictions, and automatically adjust marketing campaigns or inventory allocations. Synchronization across the affected systems ensures these AI-driven changes propagate correctly, maintaining consistency even as automation scales.

Making the commitment

Organizations serious about AI must recognize that readiness isn’t optional preparation—it’s foundational infrastructure as critical as network connectivity or cybersecurity. Treating it as anything less guarantees joining the 80-90% of failed initiatives.

The alternative means accepting that competitors investing in proper foundations will deploy AI faster, more reliably, and at greater scale. In markets where AI-powered personalization, prediction, and automation increasingly determine winners, data readiness becomes the capability that separates leaders from laggards.